Google I/O 2024: The biggest announcements from AI to Android

AI was all that we could hear.

It's a wrap on Google I/O 2024, and our crew was on the ground experiencing the event firsthand as the tech giant announced a host of Gemini-related features. If you didn't make it to Mountain View or missed the live stream, here are the biggest announcements from this year's Google I/O. As you may have already heard, this year's event was packed with all things AI. Let's dive in!

Did they just say Gemini 1.5 Flash?

Google I/O started with Sundar Pichai introducing Gemini 1.5 Flash, a new, lighter-weight Gemini model. As the name suggests, Google built it to execute tasks quickly and efficiently, in a "flash." Google says Flash can handle "high-volume, high-frequency tasks at scale," and that it excels at summarization, chat applications, image and video captioning, and extracting data from long documents and tables. Google also demonstrated Gemini 1.5 Pro's multimodal capabilities in its AI-powered note-taking app, which helps users break down complex information on the go.

Along with Flash, Gemini Nano also got some updates, moving beyond text-only prompts. Users will soon be able to interact with it using images, and Google says it will "understand the world the way people do—through image, video, and spoken language."

Google plans to bring the 1.5 Pro model to Gemini Advanced and Workspace apps. An upgraded model will also arrive in Gmail and NotebookLM in the near future and will be available in 30 different languages.

Google also gave us a look at "Gemini Live" for Gemini Advanced subscribers. Much like OpenAI's GPT-4o, Gemini Live will let users have spoken conversations with the AI model, talk at their own pace, and interrupt it mid-response with clarifying questions in real time.

We got another glimpse of this kind of real-time interaction when Google revealed Project Astra, a real-time multimodal AI from the Google DeepMind team that looks at your surroundings through a camera and answers questions about them on the go.

"We would have this universal assistant. It's multimodal; it's with you all the time," said Demis Hassabis, the head of Google DeepMind, while talking about Project Astra during I/O. "It's that helper."

Imagen 3 and Google Veo

With multimodality comes a new wave of image and video generation. According to Google, its new Imagen 3 model generates high-quality images and better understands a prompt's content and intent. Google says that even with long, detailed prompts, Imagen 3 will generate "photorealistic, lifelike images" with little to no visual noise. This can help users create more personalized messages, presentations, slides, or fun memes.

Next up is Veo, Google's new AI-powered video generation model, which is said to produce 1080p videos more than a minute long in a wide range of cinematic and visual styles. Like Imagen 3, Veo is designed to understand natural language, accurately capturing a prompt's tone and producing videos that closely match what the user asked for.

The model is said to understand cinematic terms like "timelapse" or "aerial shots of a landscape." According to Google, Veo creates footage with minimal glitches, with animals and objects moving realistically throughout shots.

For now, Imagen 3 and Veo are available only to select creators through ImageFX and VideoFX, respectively. Google says it will eventually bring some of Veo's capabilities to YouTube Shorts and other products for customers to test out, but it hasn't said when we'll be able to access them.

Google Search is getting AI-ed

At this year's I/O, Google announced a number of Search updates built around AI Overviews, which the company first introduced in January.

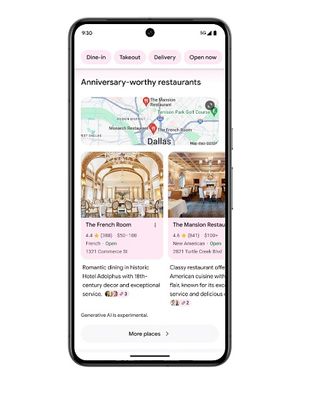

AI Overviews summarize complex topics right at the top of the search results, based on your query, helping users quickly grasp the main points of a topic without piecing the information together from multiple pages. Each Overview also links to its sources, so users can cross-check the information themselves if needed.

Additionally, Google says that if an answer isn't simple enough, you'll be able to "adjust" the AI Overview to simplify the information further. This could be particularly useful when explaining tough school concepts to kids, since the AI can generate relatable examples that make those concepts easier to grasp. Beyond that, Search will use generative AI to help users brainstorm creative ideas and to organize the results page based on the query.

While much of this is text-based, Google says that with Google Lens, users will be able to record a video and ask out loud why something is broken and how to fix it. Search will then respond with step-by-step instructions for fixing it, all in real time.

AI Overviews has rolled out to everyone in the U.S. and will soon be made available to other countries, Google adds.

Android users are in for a major AI boost

Android and AI seem to go hand in hand these days, and what's Google I/O without a few perks for Android users? With AI now at the core of the Android experience, Google discussed several updates aimed specifically at Android users. Here are a few we can expect to see rolling out in the near future.

To start off, Google is introducing a feature that detects likely phishing and spam calls in real time, alerting users to hang up before they give out personal details. This could come in especially handy for older users and children, who might be less aware of the many scams doing the rounds.

Android users will soon be able to bring up Gemini on top of any app. With AI integrated into Android phones, users can get creative by generating fun AI images and dropping them into apps like Gmail and Google Messages, or by creating memes to add to their chats. With "Ask this video" and "Ask this PDF," users can get more information about a YouTube video they're watching or get answers from a PDF without scrolling through page after page.

Circle to Search will also be able to help with complex math and physics problems, walking users through step-by-step solutions and examples that help them better understand the underlying concepts.

Finally, Gemini Nano with Multimodality will give Android's existing TalkBack screen reader more "powers." Beyond text, Android phones will be able to better understand sights, sounds, and spoken language. For instance, when someone shares an image of a dress, TalkBack will be able to describe the dress's exact color, the length of its sleeves, and the accessories that go with it, helping blind and low-vision users picture the dress without seeing it.

Gemini Nano with Multimodality is coming to Pixel phones later this year, though it remains unclear which models will get it. We'll have to keep an eye on those monthly updates to see when the new features start rolling out.

Welcome to the world of Wear OS 5

Day two of Google I/O focused more on developer previews, and it gave us a glimpse of the new Wear OS 5. This version of Wear OS will arrive "later this year" and will be based on Android 14. It promises improved battery life, and watch faces will get "Flavors" and exclusive complications.

First, the new OS will add four advanced running form measurements: Ground Contact Time, Stride Length, Vertical Oscillation, and Vertical Ratio. Wear OS 5 will also introduce "debounced goals" for fitness workouts, which only trigger once a condition has been held for a set amount of time rather than on a momentary spike. This lets users start a workout where they must, for example, stay within a certain heart rate range or run faster than a 10:00/mile pace.

For users who love trying new watch faces, Wear OS 5 will add "Flavors," which let users choose between different colors, fonts, complications, and configurations from a single menu. Wear OS 5 also brings two new complication types to the Watch Face Format (WFF): "Goal progress" and "Weighted elements." Goal progress shows your percentage toward a fitness target, like a step count, while Weighted elements shows colored segments that add up to 100% (like a pie chart), which sounds similar to the activity rings on the Apple Watch.

According to Google, the long-requested weather widget could be in the works. It is said to show current conditions, high and low temperatures, UV index, chance of rain, and other data by hour or day.

The Wear OS 5 Developer Preview is available now if you want to check it out.

Android 15 Beta 2 just landed

Google just dropped Android 15 Beta 2, which offers major improvements in productivity, privacy, security, app performance, and battery health.

The newest addition in this beta is "Private Space," which lets you hide apps in a fingerprint-protected space, similar to Samsung's Secure Folder. Private Space uses a separate profile, so apps you hide there can't be seen by others and can't run in the background while the space is locked.

Another update affects photo access: apps can prompt you to grant access to just your recent photos rather than your entire library, protecting your privacy. Health Connect is also expanding its support to new data types, such as skin temperature and training plans. Skin temperature tracking lets users store and share precise temperature readings from wearables or other devices, while training plans provide structured workout routines to help users meet their fitness goals.

Google is also beefing up security in Android 15 by preventing malicious apps running in the background from bringing other apps to the foreground. The change aims to stop malicious apps from elevating their privileges and exploiting user interactions.

The latest build is available on select Google Pixel phones and, now, on many popular Android phones from brands like Honor, iQOO, Lenovo, Nothing, OnePlus, OPPO, Realme, Sharp, Tecno, Vivo, and Xiaomi.

Lastly, this year's I/O wasn't as exciting as we'd hoped, as there were virtually no major hardware announcements. We were expecting a glimpse of an upcoming Pixel device, such as the Pixel Fold 2 or the Pixel 9. Instead, Google launched the Pixel 8a and a dock-less Pixel Tablet right before I/O.

We did get some major Pixel 9 leaks ahead of I/O, suggesting the devices could arrive soon. Perhaps Google is saving its hardware for the Made by Google event, which is expected to take place this fall.

Nandika Ravi is an Editor for Android Central. Based in Toronto, she rocked the news scene as a Multimedia Reporter and Editor at Rogers Sports and Media before bringing her expertise to the tech ecosystem. When she's not breaking tech news, you can catch her sipping coffee at cosy cafes, exploring new trails with her boxer dog, or leveling up in the gaming universe.